Official documentation (English version): Air-Gap Install

Air-gap installation

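Installing the RKE2 executable

Download the binary packages

Download the offline artifacts for the target version from GitHub Releases. This walkthrough uses v1.29.7+rke2r1 as the example: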

    mkdir /home/v1.29.7+rke2r1 && cd /home/v1.29.7+rke2r1/
    curl -OLs https://github.com/rancher/rke2/releases/download/v1.29.7%2Brke2r1/rke2-images.linux-amd64.tar.zst
    curl -OLs https://github.com/rancher/rke2/releases/download/v1.29.7%2Brke2r1/rke2.linux-amd64.tar.gz
    curl -OLs https://github.com/rancher/rke2/releases/download/v1.29.7%2Brke2r1/sha256sum-amd64.txt
    curl -sfL https://get.rke2.io --output install.sh
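Because the release also ships sha256sum-amd64.txt, the downloads can be checked before they are carried over to the air-gapped nodes. The following is a minimal sketch, assuming GNU coreutils sha256sum (8.25 or later, for --ignore-missing) and assuming the checksum file lists the artifacts by the same file names used above. The function name verify_artifacts is made up for illustration.

```shell
# Hypothetical helper: verify downloaded RKE2 artifacts against the
# release checksum file found in the same directory.
# --ignore-missing skips checksum entries for files that were not
# downloaded instead of reporting them as errors.
verify_artifacts() {
  dir="$1"
  ( cd "$dir" && sha256sum --check --ignore-missing sha256sum-amd64.txt )
}
```

A call such as verify_artifacts /home/v1.29.7+rke2r1 returns non-zero if any listed file that is present has a mismatching checksum, or if none of the listed files is present at all.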

Copy the offline artifacts

Copy the image archive into the /var/lib/rancher/rke2/agent/images/ directory:

    mkdir -p /var/lib/rancher/rke2/agent/images/
    cp rke2-images*.tar.* /var/lib/rancher/rke2/agent/images/

Install the rke2 executable

This step must be run on every node.

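Pitfall: the official documentation calls this step "Install RKE2", which is somewhat ambiguous and misleading. In fact, this step only extracts the rke2 executable and a few scripts from rke2.linux-amd64.tar.gz, copies them into place, and sets execute permissions. It does not start any process, and it does not install a Kubernetes cluster.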

    INSTALL_RKE2_ARTIFACT_PATH=/home/v1.29.7+rke2r1 sh install.sh
    ls -l /usr/local/bin/rke2*
    ls -l /usr/local/lib/systemd/system/rke2*
    systemctl status rke2-server.service
    systemctl status rke2-agent.service

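At this point node preparation is complete. What follows depends on the node plan: master nodes start the rke2-server service, and worker nodes start the rke2-agent service.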

Starting RKE2-Server (installing the Kubernetes master node)

This step is the actual installation of the Kubernetes cluster.

Configuration

    mkdir -p /etc/rancher/rke2

    cat > /etc/rancher/rke2/registries.yaml << EOF
    mirrors:
      "*":
        endpoint:
          - "http://your_registry_host_ip:5000"
    EOF

    cat > /etc/rancher/rke2/config.yaml << EOF
    token: your_token
    tls-san: host_ip_of_master_node1
    node-taint:
      - "CriticalAddonsOnly=true:NoExecute"
    system-default-registry: "your_registry_host_ip:5000"
    control-plane-resource-requests: kube-apiserver-cpu=50m,kube-apiserver-memory=256M,kube-scheduler-cpu=50m,kube-scheduler-memory=128M,etcd-cpu=100m
    EOF

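The config.yaml above sets control-plane-resource-requests, the resource requests for the control-plane components; see "Control Plane Component Resource Requests/Limits" in the official documentation. The components that can be tuned are kube-apiserver, kube-scheduler, kube-controller-manager, kube-proxy, etcd, and cloud-controller-manager. Their default values can be inspected with kubectl describe pod kube-apiserver-your_hostname -n kube-system. Changes to these parameters affect only the current host and take effect after the rke2-server service is restarted.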
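A long comma-separated value is easy to mistype, so it can help to print it one entry per line before restarting the service. The sketch below is only a convenience check based on the shape of the entries shown above (component name, then -cpu or -memory, then = and a quantity). The function name check_requests is hypothetical, and it does not validate component names or Kubernetes quantity syntax.

```shell
# Hypothetical helper: print each entry of a control-plane-resource-requests
# value on its own line and flag entries that do not look like
# <component>-<cpu|memory>=<quantity>. Returns non-zero if any entry is flagged.
check_requests() {
  status=0
  for entry in $(printf '%s' "$1" | tr ',' ' '); do
    case "$entry" in
      *-cpu=?*|*-memory=?*) printf 'ok       %s\n' "$entry" ;;
      *) printf 'suspect  %s\n' "$entry"; status=1 ;;
    esac
  done
  return "$status"
}
```

For example, check_requests "kube-apiserver-cpu=50m,etcd-cpu=100m" lists both entries as ok, while an entry with a missing = sign is listed as suspect.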

Start

    systemctl enable rke2-server.service
    systemctl start rke2-server.service

Verify

    journalctl -u rke2-server -f
    /var/lib/rancher/rke2/bin/kubectl --kubeconfig /etc/rancher/rke2/rke2.yaml get nodes

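Simplifying tool usage (rke2-server)

Unlike k3s, RKE2 does not add kubectl and the other tools to the global PATH, and its kubeconfig is not in the location kubectl looks in by default, so both have to be specified manually.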

Add the following export lines to ~/.bashrc, then reload it with the final command:

    export PATH=/var/lib/rancher/rke2/bin:$PATH
    export KUBECONFIG=/etc/rancher/rke2/rke2.yaml
    export CRI_CONFIG_FILE=/var/lib/rancher/rke2/agent/etc/crictl.yaml
    export CONTAINERD_ADDRESS=/run/k3s/containerd/containerd.sock

    source ~/.bashrc
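If the node setup is scripted and may be re-run, the export lines should not be appended to ~/.bashrc more than once. The following is a minimal sketch of an idempotent append; the function name append_once is an assumption for illustration and is not part of RKE2.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present, so re-running the setup does not duplicate it.
append_once() {
  line="$1"
  file="$2"
  touch "$file"
  # -x matches whole lines, -F treats the line as a fixed string, not a regex.
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For example, append_once 'export KUBECONFIG=/etc/rancher/rke2/rke2.yaml' ~/.bashrc can be called any number of times and leaves a single copy of the line in the file.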

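ctr is containerd's own command-line tool, used to interact directly with the containerd container runtime. crictl is the Kubernetes project's command-line tool for interacting with any container runtime that implements the Container Runtime Interface (CRI).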

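Additional master nodes

The number of master nodes should be odd (1, 3, 5, ...). For each subsequent master node, add the server parameter to the configuration, pointing at the first master node, and the node will join the cluster.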

    cat > /etc/rancher/rke2/config.yaml << EOF
    server: https://host_ip_of_master_node1:9345
    token: your_token
    tls-san: host_ip_of_master_node1
    system-default-registry: "your_registry_host_ip:5000"
    EOF
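The odd-number recommendation follows from etcd quorum arithmetic, since RKE2 server nodes run embedded etcd by default: a cluster of n members needs floor(n/2) + 1 of them available, so adding a fourth member to a three-member cluster does not increase the number of failures it can survive. A short sketch of the arithmetic (the function names are made up for illustration):

```shell
# Quorum for an etcd cluster of n members is floor(n/2) + 1;
# the cluster tolerates n - quorum failed members.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }
etcd_fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

Three and four members both tolerate one failure, and five members tolerate two, which is why even member counts add cost without adding resilience.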

Starting RKE2-Agent (installing the Kubernetes worker nodes)

Configuration

    mkdir -p /etc/rancher/rke2

    cat > /etc/rancher/rke2/registries.yaml << EOF
    mirrors:
      "*":
        endpoint:
          - "http://your_registry_host_ip:5000"
    EOF

    cat > /etc/rancher/rke2/config.yaml << EOF
    server: https://host_ip_of_master_node1:9345
    token: your_token
    tls-san: host_ip_of_master_node1
    system-default-registry: "your_registry_host_ip:5000"
    EOF

Start

    systemctl enable rke2-agent.service
    systemctl start rke2-agent.service

    journalctl -u rke2-agent -f

Simplifying tool usage (rke2-agent)

Add the following aliases to ~/.bashrc, then reload it with the final command:

    alias ctr='/var/lib/rancher/rke2/bin/ctr --address /run/k3s/containerd/containerd.sock --namespace k8s.io'
    alias crictl='CRI_CONFIG_FILE=/var/lib/rancher/rke2/agent/etc/crictl.yaml /var/lib/rancher/rke2/bin/crictl'

    source ~/.bashrc

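Cannot pull images from the private registry?

This may be related to how the image is tagged in the private registry. Taking registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.13.0 as an example, pull it on a machine with internet access, retag it for the private registry, and push it: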

    docker pull registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.13.0
    docker tag registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.13.0 your_registry_host_ip:5000/kube-state-metrics/kube-state-metrics:v2.13.0
    docker push your_registry_host_ip:5000/kube-state-metrics/kube-state-metrics:v2.13.0
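The docker tag step above keeps the repository path and tag unchanged and swaps only the registry host. When several images need the same treatment, the target name can be derived rather than typed by hand. This is a minimal sketch; mirror_ref is a hypothetical helper name, and it assumes every reference starts with a registry host (as registry.k8s.io/... does), so short names such as nginx:latest are not handled.

```shell
# Hypothetical helper: rewrite an upstream image reference so it points at a
# private registry while keeping the repository path and tag unchanged.
# Assumes the reference begins with a registry host followed by "/".
mirror_ref() {
  image="$1"
  registry="$2"
  # ${image#*/} strips everything up to and including the first "/".
  printf '%s/%s\n' "$registry" "${image#*/}"
}
```

With img set to the upstream reference, the tag command becomes docker tag "$img" "$(mirror_ref "$img" your_registry_host_ip:5000)".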

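Alternatively, pull and retag directly on the Kubernetes node (a last resort). This assumes ctr is pointed at the k8s.io namespace, as in the alias defined earlier: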

    crictl pull your_registry_host_ip:5000/kube-state-metrics/kube-state-metrics:v2.13.0
    ctr image tag your_registry_host_ip:5000/kube-state-metrics/kube-state-metrics:v2.13.0 registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.13.0

Prometheus monitoring

kube-prometheus-stack (the all-in-one bundle that includes Grafana and Alertmanager): prometheus-community

Install (kube-prometheus-stack):

    wget https://github.com/prometheus-community/helm-charts/releases/download/kube-prometheus-stack-62.3.1/kube-prometheus-stack-62.3.1.tgz
    tar -xzvf *.tgz
    cd kube-prometheus-stack
    helm install my-prometheus . -n monitoring --create-namespace

Uninstall

    helm list -n monitoring
    helm uninstall my-prometheus -n monitoring

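Using Grafana

Login

Run a port-forward on a Kubernetes node. If the cluster sits on an internal network, you also need a terminal tool such as XShell to set up a tunnel, and can then open Grafana in a browser on your workstation.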

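    kubectl port-forward svc/prometheus-grafana 3000:80 -n monitoring

The service name is derived from the Helm release name. If the command reports that the service cannot be found, list the actual names with kubectl get svc -n monitoring.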

Credentials: username admin, password prom-operator. The password is configured in the Helm chart's values.yaml.

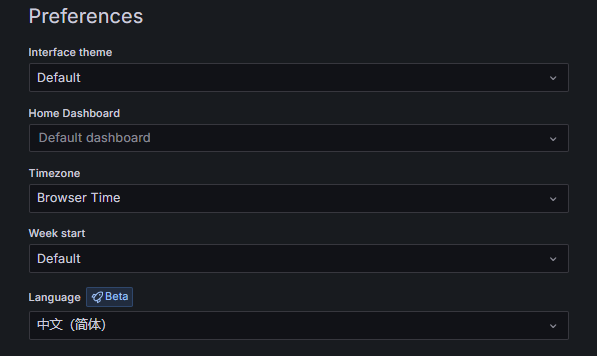

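Setting the language and time zone

After logging in, open the Profile menu under the avatar in the top-right corner to set the language and time zone.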

To save, click the Save button at the bottom of the form. Otherwise the page refreshes and the settings you just entered are lost.

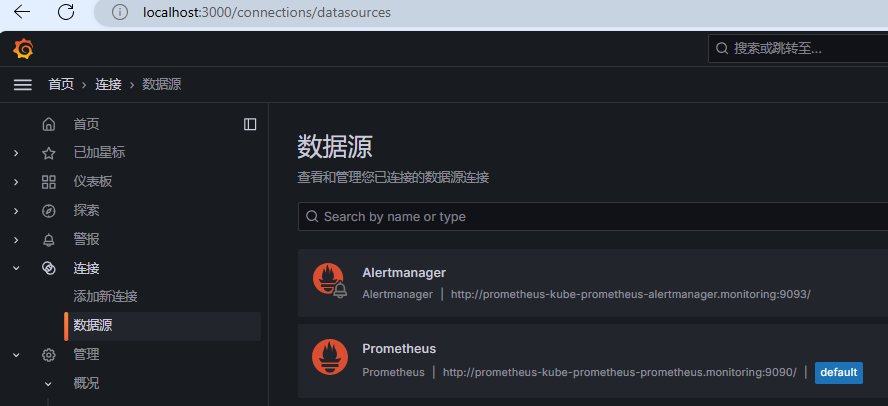

Data sources

The data sources (Alertmanager and Prometheus) are already configured by default, as shown below:

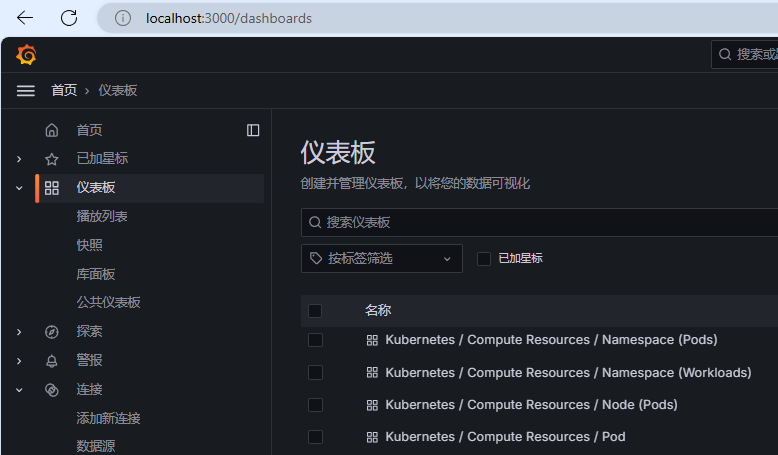

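Dashboards

Click the Dashboards menu on the left to see the many pre-built dashboards that ship with the stack. Pick whichever you need and click its name to open it.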

This one is Kubernetes / Compute Resources / Node (Pods):